Australian National University PhD / Joint Scholarship (ANU-CSC Scholarship)

01-01

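Applications are invited for the Australian National University PhD / Joint Scholarship (ANU-CSC Scholarship)

Study and work alongside leading researchers in the field

Tuition waived, plus a living allowance of more than AUD 20,000 per year

Travel funding to attend top international conferences

ANU: Australian National University

CSC: China Scholarship Council

Supervisors: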

Yi Li: http://users.cecs.anu.edu.au/~yili

Xinhua Zhang: http://users.cecs.anu.edu.au/~xzhang

Research areas: Computer Vision (CV), Machine Learning (ML)

Contact email:

yi.li@cecs.anu.edu.au; yi.li@nicta.com.au

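About the university:

The Australian National University is located in Canberra, the capital of Australia, and is the only Australian university created by a dedicated Act of the Federal Parliament. It has ranked first among Australian universities for many consecutive years and has repeatedly been placed in the world's top 20 in global university rankings. It has more than 270 fellows of the Australian Academies, the most of any Australian university; its Fellows of the Royal Society account for half of Australia's total; and its distinguished alumni include 6 Nobel laureates and 2 Australian Prime Ministers.

NICTA (National ICT Australia) is Australia's largest organization dedicated to information and communications technology (ICT) research, developing technologies that deliver economic, social and environmental benefits for Australia. NICTA collaborates with industry on joint projects, creates new companies, and supplies new talent to the ICT industry through its PhD program. NICTA has 4 laboratories in Australia and more than 700 staff.

About the supervisor:

Yi Li is a Senior Researcher at NICTA and an adjunct lecturer at ANU. He received his PhD in Electrical Engineering from the University of Maryland at College Park in 2010. His honors include the University of Maryland Future Faculty Fellowship (2008-2010), second place in the 2008 Semantic Robot Vision Challenge, and a best paper award at a 2006 international conference on handwriting recognition. He has published multiple papers in CVPR, ECCV and PAMI. Projects he has worked on include a bionic eye (one patent), human recognition (several papers), and image quality analysis.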

Overview of the CSC scholarship and application process:

Link: http://students.anu.edu.au/scholarships/gr/off/int/anu-csc.php

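CSC provides a living allowance (including Overseas Student Health Cover) and one return international airfare; ANU covers tuition. Applicants must first obtain an offer of admission from ANU and then use it to apply for the CSC scholarship, so please apply to ANU as early as possible.

For CSC funding amounts, eligibility requirements and procedures, see:

http://www.csc.edu.cn/

Last year's ANU guidelines: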

http://www.csc.edu.cn/uploads/project2013/10032.doc

PhD application process:

1. An overview of the ANU application process is available at

http://cecs.anu.edu.au/future_students/graduate/research/application

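2. Contact a supervisor:

o All applicants need to contact a supervisor at ANU/NICTA, who will write a letter of support to ANU and CSC. Please get in touch as soon as possible!

o Please send the following:

a. CV, including publications and awards.

b. Academic transcripts

c. English test scores

3. Depending on the circumstances, we will arrange a video call so that we can get to know each other.

4. Submit the application.

5. After receiving an offer with a tuition waiver, submit your materials to CSC to apply for the living allowance. Because ANU ranks highly internationally, CSC generally approves the living allowance for applicants who have obtained an ANU tuition waiver.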

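Research topics

1: Deep Learning for Computer Vision

Traditional computer vision relies on hand-crafted features such as edges and contours. Over recent decades, however, large-scale visual data has led researchers to recognize the importance of learning visual features, and in recent top conferences and journals deep learning has emerged as a highly viable solution.

Deep learning is a very active research area, and recent studies show that deep learning methods surpass many classic methods in accuracy. As an effective tool for feature learning, deep learning will play an important role in computer vision. Recent tools, including Theano, have made large-scale deep learning possible.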

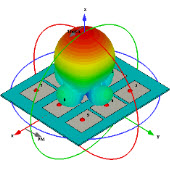

We will therefore combine machine learning, image and signal processing, and computer vision to explore deep learning in several directions. The current focus is on applying convolutional neural networks to recognition and analysis. The main directions are 1) supervised and unsupervised methods for vision problems, 2) research and development of deep learning software and hardware, and 3) practical solutions to large-scale vision problems.

With roots dating back to the 1950s, deep learning has resurfaced as an active area of research in the big data era. It has emerged as a compelling method for learning hierarchical features, which are critical for many real-world computer vision tasks. Recent developments in deep learning include a family of algorithms and a set of tools, with an emphasis on large-scale problems.

Deep learning aims to learn hierarchical feature representations. With enormous amounts of labeled data, it is now possible to train large, deep networks on big vision datasets, and such networks have consistently outperformed classic approaches in recent studies (e.g., object recognition on ImageNet). Our work sits at the intersection of several areas, including machine learning and signal processing, with a special focus on computer vision. It seeks to enable visual knowledge discovery by combining domain-specific expertise with a cross-disciplinary understanding of computer vision problems.

Deep learning in computer vision is a broad area, ranging from learning methods (convolutional neural networks, deep belief networks, etc.) to software and tools (notably Theano) to hardware implementations and vision sensors. We will explore the following areas: 1) supervised and unsupervised algorithms in computer vision, 2) deep learning hardware and software architectures, and 3) large-scale computer vision problems, including object recognition, scene analysis, and industrial and medical applications.
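To make the contrast between hand-crafted and learned features concrete, here is a minimal pure-Python sketch of the core operation inside a convolutional layer: a 2-D cross-correlation with "valid" padding and stride 1, followed by a ReLU. The function names, the toy image and the vertical-edge kernel are illustrative assumptions, not code from this project. The kernel here is fixed by hand, which is exactly the "custom feature" approach; a CNN would instead learn these weights from labeled data by backpropagation and stack many such layers.

```python
def conv2d_valid(image, kernel):
    """2-D cross-correlation, no padding, stride 1 (what a CNN layer computes per channel)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Element-wise ReLU non-linearity: negative responses are set to zero."""
    return [[max(0.0, v) for v in row] for row in feature_map]


# Toy 4x5 image with a dark-to-bright vertical edge between columns 1 and 2.
image = [
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
]

# Hand-designed vertical-edge kernel (a "custom feature").
# In a CNN these nine weights would be learned rather than fixed.
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

response = relu(conv2d_valid(image, kernel))
print(response)  # strong responses only where the window straddles the edge
```

The response is large only in the output columns whose 3x3 window covers the edge and zero in the flat bright region, which is the behavior a learned first-layer filter often ends up resembling.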

2: Human Pose Estimation

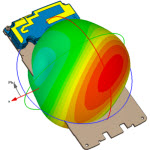

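From robotics to video search, human pose estimation plays an important role in computer vision. The main goal of this direction is to locate each body part (hands, feet, head, etc.) in images or videos, laying a solid foundation for further analysis of human behavior.

Fundamentally, human pose estimation belongs to the very interesting area of deformable models. Unlike simple detection tasks, pose estimation requires probabilistic graphical models to capture the relative positions of, and relationships between, different body parts. It also falls within the field of structured prediction.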
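How a graphical model ties body parts together can be illustrated with a sketch in the style of pictorial structures. Everything below is an illustrative assumption rather than the project's model: a chain of three parts (shoulder, elbow, wrist), four candidate 1-D locations per part, made-up detector costs, and a quadratic "spring" deformation cost that prefers consecutive parts to be one unit apart. For a chain (or tree) of parts, exact MAP inference reduces to min-sum dynamic programming.

```python
def best_part_configuration(unary, pairwise):
    """Exact MAP inference for a chain-structured part model (min-sum dynamic programming).

    unary[p][l]       -- cost of placing part p at candidate location l (e.g. negative detector score)
    pairwise(p, a, b) -- deformation cost between part p at location a and part p + 1 at location b
    Returns (total_cost, chosen location index for each part).
    """
    n_parts = len(unary)
    cost = [list(unary[0])]  # cost[p][l]: best cost of parts 0..p with part p at location l
    back = []                # back[p - 1][l]: best location of part p - 1 given part p at l
    for p in range(1, n_parts):
        row, ptr = [], []
        for b in range(len(unary[p])):
            scores = [cost[p - 1][a] + pairwise(p - 1, a, b) for a in range(len(unary[p - 1]))]
            best_a = min(range(len(scores)), key=scores.__getitem__)
            row.append(scores[best_a] + unary[p][b])
            ptr.append(best_a)
        cost.append(row)
        back.append(ptr)
    # Pick the best location of the last part, then follow back-pointers.
    last = min(range(len(cost[-1])), key=cost[-1].__getitem__)
    labels = [last]
    for ptr in reversed(back):
        labels.append(ptr[labels[-1]])
    labels.reverse()
    return cost[-1][last], labels


def spring(p, a, b):
    """Hypothetical quadratic deformation cost: ideal offset of +1 between consecutive parts."""
    return (b - a - 1) ** 2


# Hypothetical detector costs for shoulder, elbow, wrist at locations 0..3.
unary = [
    [0, 4, 4, 4],  # shoulder detector is confident about location 0
    [4, 1, 0, 4],  # elbow detector alone slightly prefers location 2
    [4, 4, 0, 4],  # wrist detector is confident about location 2
]

total, labels = best_part_configuration(unary, spring)
print(total, labels)
```

Although the elbow detector on its own prefers location 2, the joint solution places it at location 1, because the spring costs make that configuration cheaper overall: the relative-position model corrects the local detector. This naive version costs O(P·L²) for P parts and L locations; for quadratic deformation costs, generalized distance transforms bring the cost down to linear in L.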

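Major applications include vision components for humanoid robots, detection of abnormal activities in video, video search for specific actions, and visual simulation of human interactions.

3: Large Scale Road Scene Analysis

Computer vision plays an important role in analyzing road imagery. We have collected images of most of Australia's roads and built a very large dataset. This is a highly influential area, with broad applications in autonomous driving, 3-D modeling, digital cities, and more.

The main difficulties lie in the scale and diversity of the data: sunny and overcast weather, coastal and mountain roads all make image analysis harder. Any breakthrough could greatly advance the field.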

4: Large Scale Optimization for Sparse Modeling

At the heart of many machine learning algorithms lies a convex optimization problem. Popular models include support vector machines, the lasso, and MAP inference in graphical models. With the advent of big data, conventional optimization methods have become prohibitively expensive, and therefore *specialized* algorithms that restore efficiency have recently received intensive study. In the past five years, premier machine learning venues (e.g., ICML, NIPS, and JMLR) have seen a substantial amount of effort devoted to large-scale optimization.

Arguably, scalable algorithms rely on careful exploitation of problem structure, such as the decomposability of the loss, sparsity or low rank of the optimal model, and efficient approximations of convex bodies or functions. This has led to highly efficient machine learning algorithms such as stochastic optimization, sparse greedy methods, and smoothing techniques. Further acceleration has been enabled by recent advances in computing paradigms such as cluster and cloud computing. But to fully unleash their power, optimization algorithms need to be customized at the system level, which also creates novel research opportunities and challenges.
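As one concrete instance of exploiting sparsity structure, the lasso, min over w of ½‖Xw − y‖² + λ‖w‖₁, can be solved by proximal gradient descent (ISTA): take a gradient step on the smooth least-squares term, then apply soft-thresholding, the proximal operator of the ℓ1 norm, which sets small coordinates exactly to zero. The pure-Python sketch below is illustrative only; the function names and the toy data are assumptions, and the project's own algorithms (stochastic, greedy, smoothed or parallel variants) are not shown here.

```python
def soft_threshold(z, thr):
    """Proximal operator of thr * |.|: shrink z toward 0, returning exactly 0 when |z| <= thr."""
    if z > thr:
        return z - thr
    if z < -thr:
        return z + thr
    return 0.0


def lasso_ista(X, y, lam, step, n_iters=500):
    """Minimize 0.5 * ||X w - y||^2 + lam * ||w||_1 by ISTA (proximal gradient).

    X is a list of rows; step should satisfy step <= 1 / L,
    where L is the largest eigenvalue of X^T X.
    """
    n, d = len(X), len(X[0])
    w = [0.0] * d
    for _ in range(n_iters):
        # Residual r = X w - y of the smooth least-squares term.
        r = [sum(X[i][j] * w[j] for j in range(d)) - y[i] for i in range(n)]
        # Gradient of the smooth term: X^T r.
        grad = [sum(X[i][j] * r[i] for i in range(n)) for j in range(d)]
        # Gradient step followed by the l1 proximal step.
        w = [soft_threshold(w[j] - step * grad[j], step * lam) for j in range(d)]
    return w


# Toy diagonal design: X^T X = diag(4, 1), so L = 4 and step = 1 / L = 0.25.
X = [[2.0, 0.0], [0.0, 1.0]]
y = [4.0, 0.5]
w = lasso_ista(X, y, lam=1.0, step=0.25)
print(w)  # the second coefficient is driven exactly to zero
```

The exact zero in the second coefficient comes from the thresholding step, not from rounding. That is the sense in which sparsity is "exploited": later iterations and storage can skip zero coordinates, and accelerated or stochastic variants of the same proximal step scale this idea to large problems.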

This project aims to develop novel, efficient optimization algorithms for advanced sparse models, which have recently emerged and gained popularity in machine learning. Leveraging techniques from discrete optimization, convex analysis, and statistics, it will have a significant impact on a broad range of large-scale applications such as collaborative filtering and computer vision. Example research topics include, but are not limited to:

1. Stochastic and parallel optimization for low-rank models (e.g., trace norm regularization) with global optimality guarantees;

2. Non-convex relaxations of discrete optimization problems that admit decomposable structures, for improved local solutions;

3. Novel sparse and dictionary learning models (e.g., for tensors and nonnegative matrix factorization) that are amenable to efficient global optimization.
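For topic 1, a standard building block is the proximal operator of the trace (nuclear) norm, known as singular value thresholding. It is shown here as background under the assumption that a proximal-gradient method is used; the symbols f (a smooth loss), λ (regularization weight) and η (step size) are illustrative and not taken from the project description. The operator soft-thresholds the singular values, so the result is exactly low-rank:

```latex
% Prox of the trace norm: soft-threshold the singular values of Z = U diag(sigma) V^T
\operatorname{prox}_{\tau\|\cdot\|_*}(Z)
  = \arg\min_{W} \; \tfrac{1}{2}\|W - Z\|_F^2 + \tau\|W\|_*
  = U \,\operatorname{diag}\big((\sigma_1 - \tau)_+, \dots, (\sigma_r - \tau)_+\big)\, V^\top

% One proximal-gradient step for  min_W  f(W) + \lambda \|W\|_*
W_{k+1} = \operatorname{prox}_{\eta\lambda\|\cdot\|_*}\big(W_k - \eta \nabla f(W_k)\big)
```

Each step needs a singular value decomposition of an m×n matrix, which is expensive at scale; this cost is one natural motivation for the stochastic and parallel methods with global optimality that topic 1 targets.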

For more details: http://cecs.anu.edu.au/projects/pid/0000000977

5: Convex Relaxation for Latent Models

Data representations and automated feature discovery are fundamental to machine learning. Most existing approaches are built upon latent models that formalize prior beliefs about the underlying structure of the data. Popular examples include principal component analysis, mixture models, and restricted Boltzmann machines (RBMs). Unfortunately, learning these models from data usually results in non-convex optimization problems for which only locally optimal solutions can be found.
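Non-convexity appears even in the smallest latent factor model. As an illustrative worked example (not taken from the project), fit the 1×1 "data matrix" [1] with a rank-one factorization uv. The two sign-flipped factorizations are both exact, yet their midpoint is not, which violates the definition of convexity:

```latex
f(u, v) = \tfrac{1}{2}\,(1 - uv)^2, \qquad f(1, 1) = f(-1, -1) = 0

f\big(\tfrac{1}{2}(1,1) + \tfrac{1}{2}(-1,-1)\big) = f(0, 0) = \tfrac{1}{2}
  \;>\; 0 = \tfrac{1}{2} f(1, 1) + \tfrac{1}{2} f(-1, -1)
```

The sign symmetry of the latent factors, (u, v) → (−u, −v), produces separate minima with a higher-loss region between them. The same kind of symmetry among hidden units in RBMs and neural networks is one reason local methods can stall in sub-optimal solutions.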

Among the many techniques that try to ameliorate local sub-optimality, convex relaxation is often the state of the art in both theory and practice; a classic example is the semidefinite relaxation of the graph max-cut problem. A carefully designed convex approximation of the problem not only enables the use of efficient convex solvers, but also retains salient regularities in the data, with a provable approximation bound.
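The max-cut example can be written out to show the relaxation step explicitly. For a graph with symmetric nonnegative edge weights w_ij, each vertex gets a label x_i ∈ {−1, +1}, and an edge contributes w_ij exactly when its endpoints get different labels. Substituting X = xxᵀ, which satisfies X ⪰ 0, X_ii = 1 and rank X = 1, and then dropping the non-convex rank constraint gives a semidefinite program:

```latex
% Max-cut as an integer program
\max_{x \in \{-1,+1\}^n} \; \frac{1}{2} \sum_{i<j} w_{ij}\,(1 - x_i x_j)

% Semidefinite relaxation: replace x x^T by X and drop rank(X) = 1
\max_{X \in \mathbb{S}^n} \; \frac{1}{2} \sum_{i<j} w_{ij}\,(1 - X_{ij})
\quad \text{s.t.} \quad X \succeq 0, \;\; X_{ii} = 1, \; i = 1, \dots, n
```

The relaxed problem has a linear objective over a convex set, so it can be solved to global optimality, and its value upper-bounds the maximum cut. Goemans and Williamson showed that rounding its solution with a random hyperplane yields a cut whose expected weight is at least about 0.878 times the SDP value, and hence at least that fraction of the optimum. This is the kind of "tight and efficiently optimizable" relaxation the project seeks for latent models.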

Recently, a number of novel latent models, such as RBMs and deep networks, have achieved substantial success. However, little is known about how to convexify these models. The goal of this project is to fill this gap by developing convex relaxations for them that are both tight and efficiently optimizable. This will enable more accurate and scalable learning of these latent models, and will have a profound impact on a variety of applications. Example research topics include, but are not limited to:

1. Design convex relaxations for RBMs and their variants (i.e., single-hidden-layer neural networks);

2. Study the tightness of the relaxations in both theory and practice;

3. Develop convex optimization algorithms tailored to the resulting problems.

For more details: http://cecs.anu.edu.au/projects/pid/0000000978
